The advent of generative AI has been a wake-up call for risk management and information technology professionals. GenAI applications have been notably compared to the invention of the internet, computing, and fire, depending on who you ask.

At the same time, concerns over the risks of AI continue to grow, with many governments and organizations worried about implicit bias trained into the models and the lack of transparency into how the models produce their answers.

This article examines the new era of AI regulation heralded by one of the first AI frameworks, ISO 42001. We will begin by outlining how GenAI works and identifying a few fundamental issues with current systems. Following that, we'll summarize the ISO 42001 framework, as well as two other AI frameworks, before concluding with what organizations can expect going forward.

How Do Language Models Work At A High Level?

To understand why we need to manage risk around generative AI, it's first important to understand how these systems work at a basic level. Let's take large language models (LLMs) as an example, as they are currently all the rage among companies adopting AI.

LLMs are AI applications built on the machine learning paradigm of deep learning. Put simply, language models are trained to predict the next token, which you can think of as the next word:

Imagine you are asked to complete the sentence, "I'm feeling very under the _."

Your brain probably filled in the word "weather" to complete the sentence, almost automatically. AI language models are trained on unimaginably large corpora of information, with the full contents of the internet being a starting but not ending point.

The training data serves as a guide, giving language models a statistical way to identify the underlying patterns within language. During training, an optimization process called stochastic gradient descent repeatedly adjusts the model's parameters so that it gets better at predicting the next most likely token in every sentence and every paragraph. The trained model can then do this at scale across incredibly complex prompts.
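To make next-token prediction concrete, here is a minimal sketch. It deliberately swaps the neural network and gradient descent for simple word-pair counting, so it is a toy stand-in rather than how a real LLM is built. The three-sentence corpus and the function names are assumptions made up for illustration.

```python
from collections import Counter, defaultdict

# Tiny hypothetical corpus; real models train on vastly more text.
CORPUS = [
    "i am feeling under the weather",
    "she is feeling under the weather today",
    "the cat hid under the table",
]

def train_bigram_counts(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current_word, next_word in zip(words, words[1:]):
            counts[current_word][next_word] += 1
    return counts

def next_word_probabilities(counts, word):
    """Convert the follow-counts for one word into probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

counts = train_bigram_counts(CORPUS)
probs = next_word_probabilities(counts, "the")

# The "prediction" is simply the most probable next word.
prediction = max(probs, key=probs.get)
print(prediction, probs)
```

In this toy corpus, "weather" follows "the" more often than any other word, so it becomes the prediction. An LLM does something similar in spirit, but it conditions on the entire preceding text rather than a single word.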

AI & Transparency

Many claim that "we don't understand how AI works." This is both true and not true. At a conceptual level, we absolutely understand how AI works. Language models are trained across unimaginably large data sets to predict the next token using what amounts to complex matrix multiplications.

At a granular level, AI language models are a black box. To illustrate the point, let's imagine you have implemented a large language model to help screen for healthcare fraud. Now imagine that after hundreds of thousands of case reviews, you notice that the language model flags people named "Amy" for fraud at 3000% the rate (30 times the rate) of every other first name.

Understanding that the model is optimizing for next-token prediction doesn't do you much good. Trying to understand why the language model is singling out Amy is like taking a satellite photo of New York City and asking why you can't use that to predict where there are going to be traffic accidents on any given day. The level of complexity is unimaginably great.
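Notice that spotting a disparity like this is the easy part; explaining it is what's hard. As a rough sketch of the detection side, the snippet below computes flag rates per first name and compares one name against the rest. The review data, the names other than Amy, and the function names are all hypothetical.

```python
from collections import defaultdict

def flag_rates_by_group(reviews):
    """reviews: iterable of (group, was_flagged) pairs. Returns {group: flag rate}."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in reviews:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {group: flagged[group] / totals[group] for group in totals}

def rate_ratio(rates, group):
    """Compare one group's flag rate to the unweighted average rate of the others."""
    others = [rate for name, rate in rates.items() if name != group]
    baseline = sum(others) / len(others)
    return rates[group] / baseline

# Made-up case reviews: 100 per name, with Amy flagged far more often.
reviews = (
    [("Amy", True)] * 30 + [("Amy", False)] * 70
    + [("Ben", True)] * 1 + [("Ben", False)] * 99
    + [("Cara", True)] * 1 + [("Cara", False)] * 99
)

rates = flag_rates_by_group(reviews)
amy_ratio = rate_ratio(rates, "Amy")
print(f"Amy is flagged at about {amy_ratio:.0f}x the rate of other names")
```

A check like this tells you that something is wrong, but nothing in it reveals which of the model's billions of parameters is responsible.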

Generative AI, Bias, and AI Frameworks and Regulations

GenAI applications reflect many of the biases, errors, and cognitive traps that the average human falls into. Given the lack of transparency, we often can't even determine why a language model is exhibiting bias. At the same time, the public's trust in AI is waning, with surveys showing increasing concern about losing privacy.

Questions with unsatisfactory answers abound:

How do we address AI systems perpetuating existing biases present in their training data, leading to discriminatory outcomes?

AI systems require vast amounts of data to work. How is our personal information being collected, stored, and used?

How are AI companies addressing the risk of their technologies being used to create misinformation?

AI models require huge computational resources and massive energy consumption. What are companies doing to mitigate their impact on carbon emissions?

In an attempt to assuage such concerns, regulators and standards bodies have ushered in an era of AI frameworks, standards, and regulation. Three instruments mark its beginning: ISO/IEC 42001 (an international standard), the EU AI Act (a law), and the NIST AI Risk Management Framework (a voluntary framework).

1. ISO/IEC 42001

ISO 42001 is the first AI management system standard to be published. The goal is to allow companies to establish company-wide guidelines for managing AI applications and to proactively certify that they are using AI safely. The framework directly addresses many of the concerns around AI and transparency, bias, and ethics:

The Documentation of AI Systems & Algorithmic Transparency

Organizations should maintain detailed documentation of their AI algorithms, data sources, and decision-making processes.

Recommendation engines, for example, collect data on user behavior, such as past purchases, search queries, browsing history, and more that is then used by an AI algorithm to generate recommendations for users.

Under ISO 42001, companies that deploy AI-driven recommendation engines (such as Netflix's personalized show/movie recommendations) should document the types of consumer data they use, the algorithm's logic, and how recommendations are generated.

Furthermore, AI systems must be designed to provide explanations for their decisions that are understandable to non-experts. For instance, an AI used for loan approval must provide applicants with a clear explanation of the factors that influenced their application decision, such as income and credit score.
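One way to picture an explainable decision is a scoring model whose factors can be read back to the applicant. The sketch below is a simplified illustration of that idea, not a method prescribed by ISO 42001. The weights, the threshold, the debt factor, and the function names are hypothetical.

```python
# Hypothetical weights and threshold for illustration; not from any real lender.
WEIGHTS = {
    "income_thousands": 0.4,
    "credit_score": 0.1,
    "existing_debt_thousands": -0.5,
}
APPROVAL_THRESHOLD = 80.0

def score_application(applicant):
    """Return the total score and each factor's contribution to it."""
    contributions = {factor: WEIGHTS[factor] * applicant[factor] for factor in WEIGHTS}
    return sum(contributions.values()), contributions

def explain_decision(applicant):
    """Produce a decision plus plain-language lines, largest factor first."""
    total, contributions = score_application(applicant)
    decision = "approved" if total >= APPROVAL_THRESHOLD else "declined"
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    lines = [
        f"Application {decision} (score {total:.1f}, threshold {APPROVAL_THRESHOLD:.1f})."
    ]
    for factor, value in ranked:
        direction = "raised" if value >= 0 else "lowered"
        lines.append(f"- {factor} {direction} the score by {abs(value):.1f}")
    return decision, lines

applicant = {"income_thousands": 60, "credit_score": 700, "existing_debt_thousands": 20}
decision, lines = explain_decision(applicant)
print("\n".join(lines))
```

A simple additive model like this is easy to explain precisely because each factor's effect is visible. More complex models typically need additional explanation techniques to offer anything comparable.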

Data Privacy and Security

Under ISO 42001, measures to protect data should be integrated into the use of AI. Before implementing AI in systems and applications, organizations need to consider factors such as what data the AI will process, where that data is stored, and who can access it.

Furthermore, security measures mapped onto ISO 42001 data privacy and security controls help organizations comply with industry-specific data protection regulations such as HIPAA. Under both HIPAA and ISO 42001, for example, an AI-driven health app might encrypt user data both at rest and in transit and implement strict access controls to protect sensitive health information.

Ethical Principles Controls Under ISO 42001

ISO 42001 controls emphasize accountability and human oversight of AI. Part of this entails a clear assignment of roles and responsibilities for AI system outcomes. As an example, a company could designate a Data Protection Officer responsible for overseeing the ethical use of AI and handling any issues.

AI Frameworks & Continuous Improvement Controls

One of the core tenets of ISO 42001 is continuous improvement.

Organizations should continuously monitor AI performance to identify areas for improvement. For instance, a healthcare AI system used for diagnosis should be continuously monitored for accuracy and updated based on the latest medical research.
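As a rough sketch of what continuous monitoring can look like in practice, the class below tracks accuracy over a rolling window of recent cases and raises a flag when it dips below a threshold. The class name, window size, and threshold are assumptions for illustration; ISO 42001 does not prescribe specific values or tooling.

```python
from collections import deque

class AccuracyMonitor:
    """Tracks accuracy over the most recent cases and flags drops for review."""

    def __init__(self, window_size=100, alert_threshold=0.9):
        # deque with maxlen discards the oldest outcome once the window is full.
        self.outcomes = deque(maxlen=window_size)
        self.alert_threshold = alert_threshold

    def record(self, prediction, confirmed_label):
        """Store whether the AI's prediction matched the confirmed result."""
        self.outcomes.append(prediction == confirmed_label)

    def accuracy(self):
        """Rolling accuracy, or None if nothing has been recorded yet."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """True when rolling accuracy has fallen below the alert threshold."""
        current = self.accuracy()
        return current is not None and current < self.alert_threshold

# Hypothetical usage with a small window so the effect is easy to see.
monitor = AccuracyMonitor(window_size=4, alert_threshold=0.75)
for _ in range(4):
    monitor.record("condition_a", "condition_a")  # four correct predictions
healthy = monitor.needs_review()

monitor.record("condition_a", "condition_b")  # two recent misses
monitor.record("condition_b", "condition_a")
degraded = monitor.needs_review()
print(healthy, degraded, monitor.accuracy())
```

The rolling window matters here: a long-running average would hide a recent decline, while a window surfaces it quickly enough for someone to investigate.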

2. The EU AI Act

The EU AI Act is the world's first comprehensive AI law. It was passed by the European Parliament in March 2024 and entered into force on 1 August 2024, with its obligations phasing in over the following years. Understanding the implications of this act is critical for businesses, as it directly addresses common concerns about the use of AI:

Risk-Based Classification

The EU AI Act introduces a risk-based classification system to regulate AI systems according to the level of risk they pose to individual users and society more broadly. The aim is to strike a balance between innovation and the need for safety and ethical considerations.

In practice, this means categorizing AI systems into four risk levels: unacceptable risk, high risk, limited risk, and minimal risk. Each category entails specific requirements to address the potential impact of the AI system. More stringent regulations are applied to AI technologies that could significantly affect human rights, safety, and well-being, while allowing a greater degree of flexibility for lower-risk applications.

An AI system controlling autonomous vehicles would naturally be considered high risk and would need to comply with stringent safety standards. Meanwhile, an AI-driven spam filter or an AI being used to help personalize content would be considered minimal risk and face less strict requirements.
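To show how an organization might track these tiers internally, here is a minimal sketch of an AI inventory keyed by risk level. The inventory, the function names, and the tier assignments (which simply mirror the examples above) are illustrative assumptions, not legal determinations under the Act.

```python
from enum import Enum

class RiskTier(Enum):
    """The four risk levels named in the EU AI Act, strictest first."""
    UNACCEPTABLE = 1
    HIGH = 2
    LIMITED = 3
    MINIMAL = 4

# Hypothetical internal inventory. Tier assignments mirror the examples in
# the text and are illustrative only, not legal classifications.
AI_INVENTORY = {
    "autonomous vehicle control": RiskTier.HIGH,
    "spam filter": RiskTier.MINIMAL,
    "content personalization": RiskTier.MINIMAL,
}

def classify(system_name):
    """Look up a system's tier; unknown systems must be assessed by a person."""
    try:
        return AI_INVENTORY[system_name]
    except KeyError:
        raise LookupError(f"{system_name!r} has no assigned tier; assess it first") from None

def strictest_first(inventory):
    """Order systems so the most heavily regulated ones are reviewed first."""
    return sorted(inventory, key=lambda name: inventory[name].value)

review_order = strictest_first(AI_INVENTORY)
print(review_order)
```

Refusing to guess a tier for an unlisted system is a deliberate choice in this sketch: classification under the Act is a judgment call that should involve people, not a default.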

Transparency, Accountability, & Data Governance

Organizations must disclose when AI is used and provide transparent information about its functionality. For example, an e-commerce website using a chatbot has to disclose to users that it's an automated system.

The EU AI Act also contains requirements around high-quality data management practices to prevent biases. For example, AI recruitment tools should be trained on diverse and representative datasets, with training data covering a wide range of demographic groups to prevent bias against any particular group.

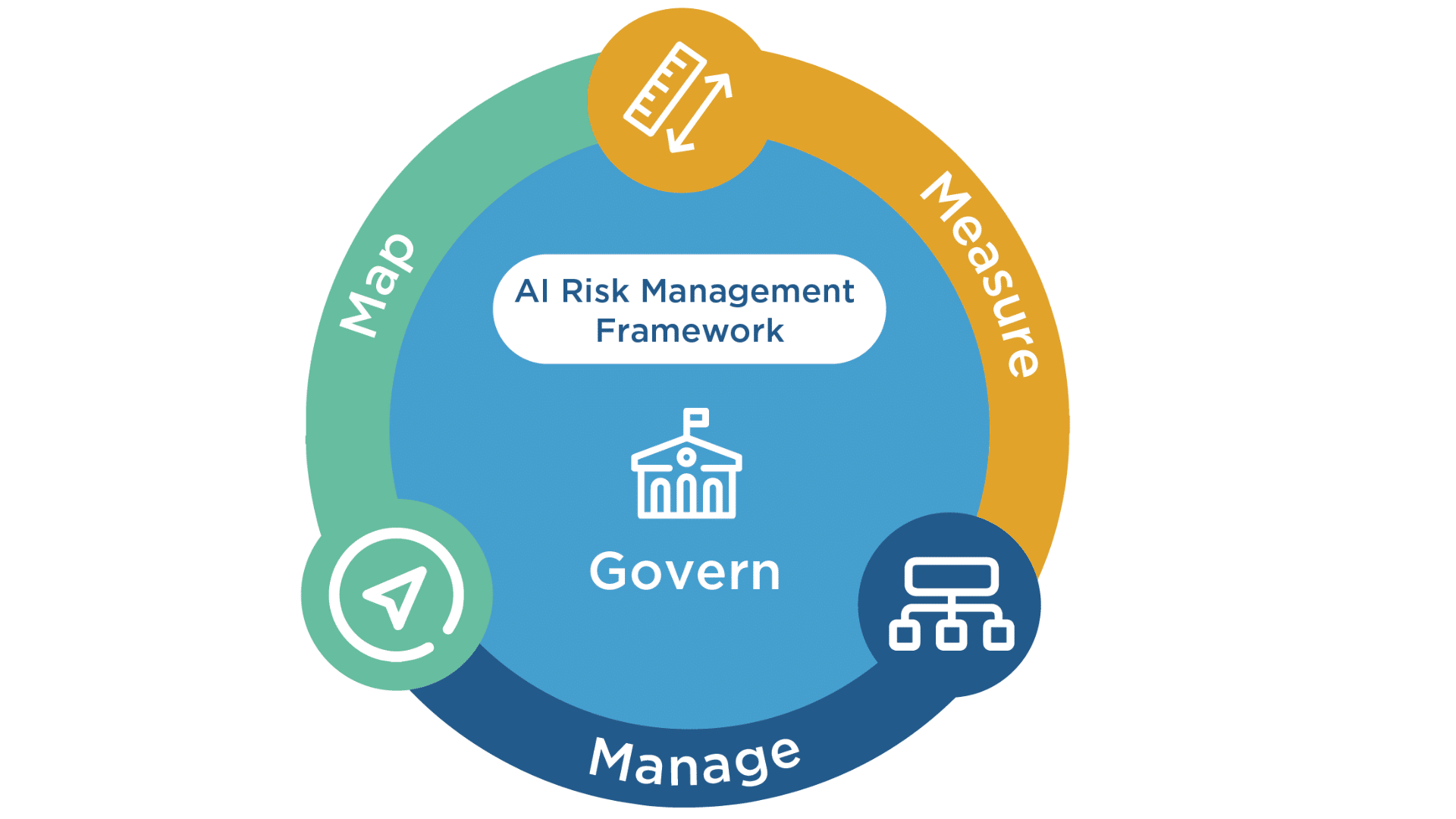

3. NIST AI Risk Management Framework

Image Source: NIST AI Risk Management Framework

The NIST AI Risk Management Framework is a voluntary, flexible framework for managing AI-related risks. It focuses on several key areas:

Governance: A Common Core Tenet of AI Frameworks

The role of governance is central under the NIST AI Risk Management Framework. This is unsurprising, as there has been a similar focus in other recent frameworks, such as Version 2.0 of the NIST Cybersecurity Framework, which added a new Govern function.

Company leadership must establish procedures to oversee AI development and use. As an example, a marketing agency could document all of its AI usage policies so that employees understand if/how AI tools should be used for daily tasks such as copywriting, generating images, and social media posts.

Similar to ISO 42001 and the EU AI Act, the NIST AI Risk Management Framework also emphasizes the importance of assigning clear responsibilities for AI risk management. Businesses should designate a team member, such as the head of IT, to oversee AI usage and projects.

Risk Identification

Pinpointing the potential risks and impacts associated with AI systems is another key component of the NIST AI framework. This can take the form of conducting a simple risk assessment. For instance, an online retailer may evaluate the risks of using an AI chatbot for customer service to provide clarity on the following questions:

Are there scenarios in which the AI may fail to understand complex customer queries? How often is this projected to occur, and what would be the impact on customer satisfaction? How often might the chatbot provide incorrect information?

These are important questions to answer.
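A simple risk assessment like this is often captured in a risk register. The sketch below scores each risk as likelihood times impact on 1-to-5 scales, which is a common risk-matrix convention and an assumption here, not something the NIST framework mandates. The specific ratings are made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain); hypothetical scale
    impact: int      # 1 (negligible) to 5 (severe); hypothetical scale

    @property
    def score(self):
        """Simple likelihood-times-impact score."""
        return self.likelihood * self.impact

def prioritize(risks):
    """Sort risks so the highest-scoring ones are addressed first."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)

# Made-up ratings for the chatbot questions raised above.
register = [
    Risk("Chatbot fails to understand complex customer queries", likelihood=4, impact=3),
    Risk("Chatbot provides incorrect information", likelihood=3, impact=5),
    Risk("Customer satisfaction drops after failed conversations", likelihood=2, impact=4),
]

ranked = prioritize(register)
for risk in ranked:
    print(f"{risk.score:>2}  {risk.description}")
```

Even a rough scoring like this forces the team to write the risks down, agree on how serious each one is, and decide which to mitigate first.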

Tracking Performance Metrics & Bias Assessments

Lastly, the NIST AI framework calls on organizations to assess their AI systems regularly and enact corresponding improvements. Companies should develop key performance indicators (KPIs) that are used to measure AI accuracy, performance, and bias.

Specific KPIs vary depending on the industry. Let's take a healthcare provider as an example:

A medical clinic using an AI application for diagnostic support may examine the accuracy rate as a KPI by comparing AI diagnostic results with diagnoses made by human doctors. Another KPI they might assess is the false positive/negative rate - how often the AI system incorrectly identifies an illness where there is none (false positive) or misses a diagnosis (false negative).
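These KPIs are straightforward to compute once AI results are paired with the doctors' diagnoses. The sketch below treats the doctors' diagnoses as the reference, which is an assumption since doctors can be wrong too. The function name and sample data are hypothetical.

```python
def diagnostic_kpis(ai_results, doctor_results):
    """Compare AI results (True = illness found) against doctors' diagnoses."""
    tp = fp = tn = fn = 0
    for ai_positive, doctor_positive in zip(ai_results, doctor_results):
        if ai_positive and doctor_positive:
            tp += 1  # both found the illness
        elif ai_positive and not doctor_positive:
            fp += 1  # AI flagged an illness the doctor did not find
        elif not ai_positive and doctor_positive:
            fn += 1  # AI missed an illness the doctor found
        else:
            tn += 1  # both found no illness
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else None,
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else None,
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else None,
    }

# Made-up results for eight cases.
ai_results     = [True, True,  False, False, True, False, False, False]
doctor_results = [True, False, False, False, True, True,  False, False]

kpis = diagnostic_kpis(ai_results, doctor_results)
print(kpis)
```

Reporting the two error rates separately matters in healthcare, because a missed diagnosis and a false alarm carry very different costs.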

Assessing the reliability and fairness of AI systems is a cornerstone of the three AI frameworks we've gone over in this article. By measuring outcomes, organizations can enable their AI applications to continuously improve and deliver desired results while alleviating concerns from prospects, customers, and partners.

Concluding Thoughts On AI Frameworks

The three AI frameworks discussed in this article share several common goals and approaches:

Each framework emphasizes a risk-based approach that prioritizes risk remediation based on the potential impact of AI systems. Additionally, they each stress the importance of making AI systems transparent and explainable. There is a shared emphasis on the need for ethical AI development to protect individuals and society, and each framework underscores the importance of governance in its own way.

Lastly, ISO 42001, the EU AI Act, and the NIST AI Risk Management Framework all focus on the role of continuous improvement: Adherence to AI frameworks is an ongoing process that involves embedding security functions into daily business operations. AI technologies are swiftly evolving, and organizations must be prepared to pivot their approach and adapt their policies based on change.

By implementing these frameworks, organizations can be better equipped to manage the risks associated with AI, answer their customers' and prospects' concerns about AI, and ensure their use of AI is safe and responsible.

About Rhymetec

Our experts have been disrupting the cybersecurity, compliance, and data privacy space since 2015. We make security simple and accessible so you can put more time and energy into critical areas of your business. What makes us unique is that we act as an extension of your team. We consult on developing stronger information security and compliance programs within your environment and provide the services to meet these standards. Most organizations offer one or the other.

From compliance readiness (SOC 2, ISO/IEC 27001, HIPAA, GDPR, and more) to Penetration Testing and ISO Internal Audits, we offer a wide range of consulting, security, vendor management, phishing testing services, and managed compliance services that can be tailored to your business environment.

Our team of seasoned security experts leverages cutting-edge technologies, including compliance automation software, to fast-track you to compliance. If you're ready to learn about how Rhymetec can help you, contact us today to meet with our team.
